Privacy statement: Your privacy is very important to Us. Our company promises not to disclose your personal information to any external company with out your explicit permission.


With the advancement of technology, researchers have long sought convenient and efficient transport mechanisms. An omnidirectional motion mechanism has full freedom of movement in the plane, which gives it high flexibility in confined spaces and broad application prospects in military, industrial, and everyday settings. Human-computer interaction based on body posture and gestures is a novel and natural mode of interaction: people can interact with a computer quickly through simple body language, which is easy to implement and flexible. Kinect is a motion-sensing (somatosensory) device developed by Microsoft that supports body recognition and human-computer interaction.

This paper therefore designs a model of a somatosensory-controlled omnidirectional transport platform built on Mecanum wheels. For its control system, two Kinect-based somatosensory control modes are proposed: one based on skeletal motion information recognition and one based on depth-image gesture recognition. The two modes target two application scenarios. When the platform is integrated into a remotely operated device such as a mobile robot, the operator has ample space and can use a variety of body postures for fine control. When the platform is integrated into a device operated at close range, such as a wheelchair or a forklift, the operator sits in a confined space and can use short-range hand gestures for simple, fast, and efficient operation. The experimental results show that both control modes control the omnidirectional transport platform well.

1 Model construction and kinematics analysis of omnidirectional transportation platform

1.1 Omnidirectional platform construction

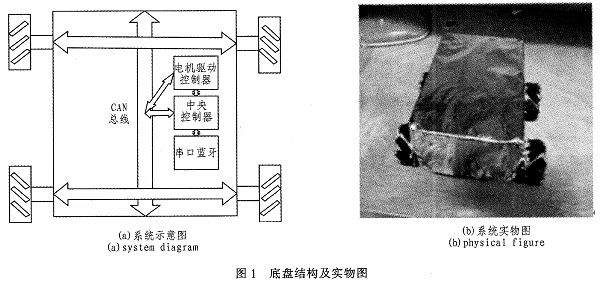

The omnidirectional mobile platform constructed in this paper is shown in Figure 1(a). It consists of 60 mm aluminum Mecanum wheels with 45° rollers, DC motors, a motor drive module, a 12 V lithium battery, an MSP430F149 minimum-system control board, a serial Bluetooth module, and other components. The platform controller reads the data sent by the host computer over Bluetooth and performs the corresponding action. Figure 1(b) shows the physical model.

1.2 Omni-directional kinematics analysis and manipulation

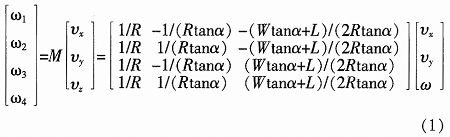

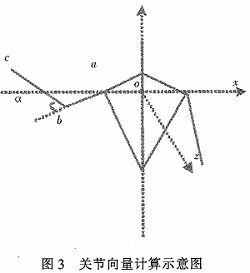

As shown in Fig. 2(a), the Mecanum wheel consists of small rollers distributed around the circumference of the main wheel, with each roller axis at α = 45° to the wheel axis. Each roller can spin about its own axis while revolving around the axle, so the main wheel has two degrees of freedom: rotation about the axle and translation in the direction perpendicular to the roller axis. Figure 2(b) shows the motion analysis of the chassis. The kinematic equation of the platform, equation (1), is obtained by analyzing the motion of the wheels.

In equation (1), Vx, Vy, and ω are the control quantities. In this paper, the microcontroller generates the PWM drive signal Cn to drive the chassis. In equation (2), Cn is the power of the nth motor, ωn is the calculated speed of the nth motor, ωmax is the speed at the maximum output power set for the motors at the same voltage, and mn is a measured parameter that keeps the four motors at the same maximum speed.
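As an illustration of how the control quantities Vx, Vy, and ω map to wheel speeds, the following is a minimal sketch of the inverse kinematics commonly used for a four-wheel Mecanum chassis. It is not necessarily the paper's equation (1): the axis convention (x forward, y to the left, ω counterclockwise), the wheel order, and the half-dimensions `lx` and `ly` are assumptions. The wheel radius of 0.03 m follows from the 60 mm wheels. The `pwm_duty` helper is a plausible normalization built from the quantities named in the text (ωn, ωmax, mn), not the paper's equation (2).

```python
def mecanum_wheel_speeds(vx, vy, omega, r=0.03, lx=0.10, ly=0.10):
    """Return wheel angular speeds (rad/s) for a four-wheel Mecanum chassis.

    Order: front-left, front-right, rear-left, rear-right.
    vx: forward speed (m/s), vy: leftward speed (m/s), omega: yaw rate (rad/s).
    r is the wheel radius; lx and ly are the half wheelbase and half track
    width (hypothetical values, not taken from the paper).
    """
    k = lx + ly
    return (
        (vx - vy - k * omega) / r,  # front-left
        (vx + vy + k * omega) / r,  # front-right
        (vx + vy - k * omega) / r,  # rear-left
        (vx - vy + k * omega) / r,  # rear-right
    )


def pwm_duty(wheel_speed, omega_max, m=1.0):
    """Scale a wheel speed to a signed PWM duty in [-1, 1].

    Assumed form: duty = m * wheel_speed / omega_max, where m is a measured
    per-motor correction factor. The result is clamped to the valid range.
    """
    duty = m * wheel_speed / omega_max
    return max(-1.0, min(1.0, duty))
```

With this convention, pure forward motion drives all four wheels at the same speed, while pure sideways motion drives the diagonal wheel pairs in opposite directions.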


2 Human body depth image and bone information acquisition

The Kinect sensor is used to obtain the depth image and skeleton information of the human body. It comprises an RGB color camera, an infrared emitter, and an infrared CMOS camera, and it provides depth image data and RGB image data of the target. Skeleton tracking is then applied to the depth image data to extract the human skeleton information.

3 Skeletal motion information recognition control mode design

3.1 Joint angle calculation method

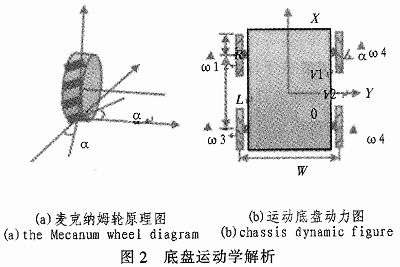

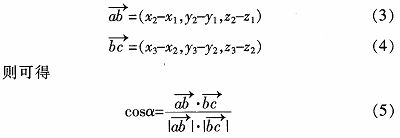

Kinect can track 20 skeleton joint points of the human body. In the skeletal motion information recognition control mode, the system identifies control commands by analyzing the motion data of these points. This paper uses the left and right shoulder, elbow, wrist, and hand joint points, and identifies limb movement from the rotation angle of each joint. From the skeleton data obtained by Kinect, a spatial coordinate system is established with the shoulder center as the origin. Vectors are constructed from the coordinates of the joint points, and the joint rotation angles are computed from these vectors. The rotation of the right elbow joint is taken as an example.

As shown in Fig. 3, a, b, and c are the shoulder, elbow, and wrist joint points of the right arm, with space coordinates (x1, y1, z1), (x2, y2, z2), and (x3, y3, z3) respectively, and the angle of movement of the elbow joint is α. The cosine of α follows from the vectors constructed on these three points.

Finally, the joint angle is obtained by applying the inverse trigonometric function.
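The calculation described above can be sketched as follows. This is a minimal example that assumes α is the angle at the elbow point b between the vector from b to the shoulder a and the vector from b to the wrist c, computed with the dot product and the inverse cosine. The function name and the use of degrees are choices made for this illustration.

```python
import math


def joint_angle_deg(a, b, c):
    """Angle at point b (degrees) between vectors b->a and b->c.

    a, b, c are (x, y, z) tuples, e.g. shoulder, elbow, and wrist positions.
    """
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(ba, bc))
    norm = math.sqrt(sum(p * p for p in ba)) * math.sqrt(sum(q * q for q in bc))
    if norm == 0.0:
        raise ValueError("joint points must not coincide")
    # Clamp to [-1, 1] so rounding errors cannot push acos out of its domain.
    cos_alpha = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_alpha))
```

For instance, an upper arm pointing straight up from the elbow and a forearm pointing sideways give an angle of 90°, and a fully extended arm gives 180°.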

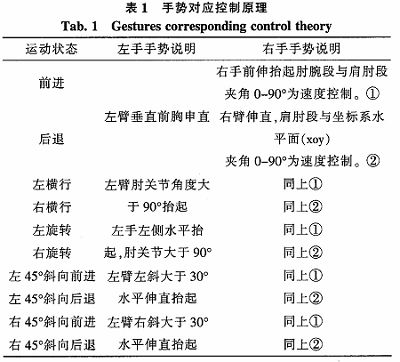

3.2 Control commands corresponding to motion postures

This paper therefore uses two-handed cooperation to control the omnidirectional chassis. The mathematical model shows that the chassis can follow an arbitrary trajectory, but allowing arbitrary directions easily makes control unstable, so the advantage becomes a disadvantage. We therefore restrict the set of movement directions so that the platform keeps the rich mobility of omnidirectional motion while remaining stable. As listed in the table, we define 10 directional movements and assign a distinct natural posture to each control command.

4 Gesture recognition mode design

4.1 Background segmentation

In image-based gesture recognition, the operator's gesture must first be extracted, which requires separating the palm from the background. Based on the depth data from Kinect, this paper performs background segmentation by thresholding: the average depth value of the foreground is extracted and used to segment the scene. The depth threshold is set as:

$$\mu_{\max} = \omega + \varepsilon \qquad (6)$$

where ω is the minimum distance, determined experimentally, at which the palm can be segmented accurately, ε is an adjustable value set freely according to the actual application scene, and μmax bounds the depth range within which the palm can be accurately identified.
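The thresholding step in equation (6) can be sketched as follows. This is a minimal example on a depth map stored as nested lists of millimeter values. It assumes that a pixel belongs to the foreground when its depth lies between ω and μmax; the function names and the choice of a lower bound at ω are assumptions for this illustration, not details given in the paper.

```python
def depth_threshold(omega, epsilon):
    """Equation (6): upper depth threshold mu_max = omega + epsilon."""
    return omega + epsilon


def segment_foreground(depth_mm, omega, epsilon):
    """Return a binary mask (1 = foreground) for a 2D depth map in millimeters.

    A pixel is kept when omega <= depth <= mu_max (assumed rule). A depth of 0,
    often used by depth sensors for "no reading", therefore falls outside the
    range whenever omega > 0.
    """
    mu_max = depth_threshold(omega, epsilon)
    return [[1 if omega <= d <= mu_max else 0 for d in row] for row in depth_mm]
```

A larger ε widens the working range at the cost of letting more of the arm or body into the mask, which is why the text leaves it adjustable per scene.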

4.2 Gesture recognition

This paper uses a template matching algorithm proposed by Y-H. Lin to process the extracted gestures and recognize them. The algorithm first converts the extracted two-dimensional image into a one-dimensional vector, which removes the influence of in-plane scaling and rotation. Reference template vectors at several scales are also constructed for each gesture, and the extracted gesture vector is compared with the reference templates to obtain the recognition result.
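The comparison step can be illustrated with a generic nearest-template sketch. This is not the algorithm by Y-H. Lin, whose details are not given here: it assumes the gesture has already been converted to a one-dimensional vector, and it uses normalized correlation as a stand-in similarity measure. The gesture labels and all function names are hypothetical.

```python
import math


def _normalize(v):
    """Center a vector on its mean and scale it to unit length."""
    mean = sum(v) / len(v)
    centered = [x - mean for x in v]
    norm = math.sqrt(sum(x * x for x in centered))
    return [x / norm for x in centered] if norm else centered


def similarity(u, v):
    """Normalized correlation of two equal-length vectors, in [-1, 1]."""
    return sum(p * q for p, q in zip(_normalize(u), _normalize(v)))


def classify(vector, templates):
    """Return (label, score) of the best-matching reference template.

    templates maps a gesture label to a list of reference vectors, for
    example one reference vector per scale.
    """
    best_label, best_score = None, -2.0
    for label, references in templates.items():
        for ref in references:
            if len(ref) != len(vector):
                continue  # skip templates of a different length
            score = similarity(vector, ref)
            if score > best_score:
                best_label, best_score = label, score
    return best_label, best_score
```

Keeping several reference vectors per gesture means a query only has to match one of them well, which is the role the multi-scale templates play in the description above.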

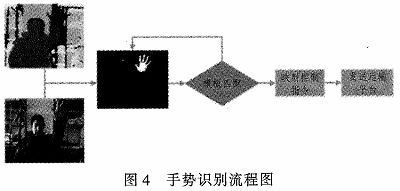

4.3 System Flow Chart

The flow chart of the system using gesture recognition for control is shown in Figure 4.

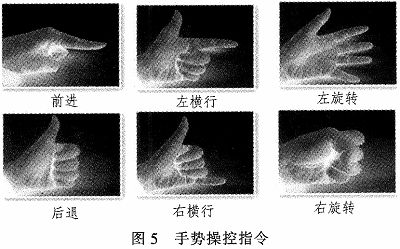

4.4 Commands corresponding to gestures

For the application scenario of this control mode, this paper designs 6 motion commands for the transport platform, which meet the operator's need for simple and accurate control. The gestures and their corresponding commands are shown in Figure 5.

5 Experimental analysis

The host computer of the control system is developed with the Kinect Software Development Kit (SDK) v1.8 in Visual Studio 2013, using C# as the programming language.

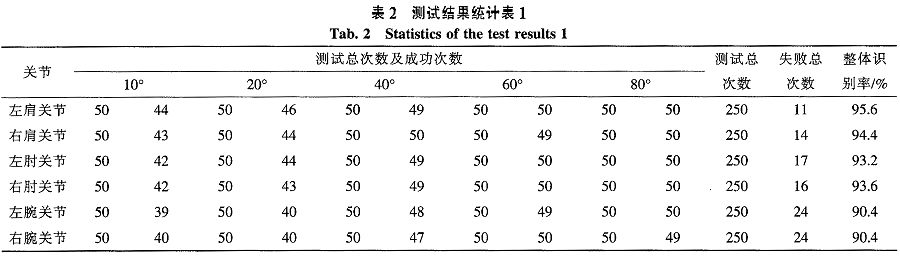

5.1 Skeletal motion information recognition control mode validity test

The success of this mode depends on how reliably the rotation angles of the joints are recognized. We therefore tested rotation-angle recognition for the left and right shoulder, elbow, and wrist joints. The test method was as follows: we selected 10 people of different heights and set the rotation angle of each joint to 10°, 20°, 40°, 60°, and 80°, with 5 trials per angle. Each joint was thus tested 250 times in total, with an allowed angle error of ±30°. The results, with accidental outliers removed, are shown in Table 4. Two findings stand out: the recognition rate decreases in order from the shoulder joints to the elbow joints to the wrist joints, and the larger the rotation angle, the higher the recognition success rate. The reason is that Kinect's recognition of a joint angle depends on the amplitude of the change in body posture, and that amplitude depends on both the position of the joint and its rotation angle. The shoulder joint therefore has the highest recognition accuracy, and for the same joint a larger rotation angle gives a higher recognition rate. Even so, the recognition rate of every joint at every rotation angle exceeds 90%, which meets the control requirements.
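The trial count and the pass criterion described above can be written out as a short sketch. The function names are hypothetical, and the code only restates the procedure: a trial counts as recognized when the measured angle lies within the allowed error of the target, and the recognition rate is the fraction of recognized trials. It contains no measured data.

```python
def trials_per_joint(people, angles, repeats):
    """Total number of trials for one joint."""
    return people * len(angles) * repeats


def is_recognized(measured_deg, target_deg, tolerance_deg):
    """True when the measured angle is within the allowed error of the target."""
    return abs(measured_deg - target_deg) <= tolerance_deg


def recognition_rate(measurements, target_deg, tolerance_deg):
    """Fraction of measurements recognized for one target angle."""
    if not measurements:
        raise ValueError("no measurements given")
    hits = sum(is_recognized(m, target_deg, tolerance_deg) for m in measurements)
    return hits / len(measurements)
```

With 10 people, the 5 target angles, and 5 repeats, `trials_per_joint` gives the 250 trials per joint stated in the text.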

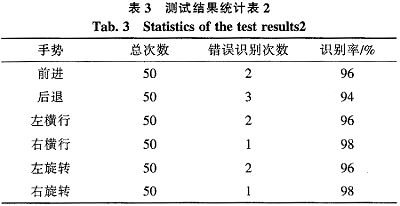

5.2 Gesture Recognition Test

For the proposed control gestures, we tested the recognition accuracy. The test method was as follows: we selected 10 people with different hands to perform 10 recognition tests for each gesture, that is, 50 tests per gesture. As shown in the table, because the gestures used are relatively large and the number of gesture categories is small, the gesture recognition accuracy is high and meets the control requirements.

5.3 Overall Maneuverability Verification

After verifying the effectiveness of the two somatosensory control modes, we tested the actual handling performance of the transport platform. We laid out a task course with black tape on flat ground and invited three operators with brief training to control the platform. The test was run three times using the two modes. All three operators completed every test item, but their times and routes differed: skilled operators followed smoother routes and took less time than unskilled ones. Limb (posture) control also took more time than gesture control because it is more elaborate.

6 Conclusion

This paper proposes two Kinect-based somatosensory control modes, skeletal motion information recognition and depth-image gesture recognition, for an omnidirectional transport platform based on Mecanum wheels. Experiments show that both control modes meet the control requirements and offer flexibility and high efficiency.
